Python’s reputation as a powerful and versatile programming language rests in part on its tools for working with sequences, notably generators, iterables, and iterators. These features are integral to Python’s design philosophy, which emphasizes readability, efficiency, and simplicity. Although they are used everywhere, many developers never look closely at how they work, and so leave much of their potential untapped.

Generators, iterables, and iterators represent more than just functionalities or syntactic conveniences; they embody a fundamental approach to handling sequences and data streams in Python. Generators, with their `yield` mechanism, allow for the creation of items on-the-fly and are especially useful in scenarios where generating all items at once is not memory-efficient or practical. This lazy generation of items ensures that memory usage is optimized, as values are produced only when needed. By integrating generators into their code, developers can handle large datasets or infinite sequences with minimal resource consumption. Iterables and iterators, on the other hand, provide a standardized way to traverse through data. Whether it’s a list, a file, or a custom data structure, the ability to iterate over these objects is a cornerstone of Python programming. Creating custom iterables can lead to more readable and maintainable code, as it allows encapsulating complex iteration logic within objects. This encapsulation aligns with Python’s object-oriented nature, promoting code reusability and modularity.

Developing a solid grasp of these concepts is not just about leveraging Python’s features; it’s about adopting a more Pythonic way of thinking. This involves writing code that is not just functional but also clear and elegant. Python’s emphasis on readability and simplicity doesn’t just make the language accessible to beginners; it also allows experienced programmers to express complex ideas in a straightforward and intuitive manner. In essence, mastering generators, iterables, and iterators enhances coding skills in Python by fostering a deeper understanding of the language’s capabilities and design principles. It empowers developers to write more efficient, effective, and elegant code, fully harnessing the power of Python in their programming endeavors.

Exploring Iterables

In Python, one of the core concepts that beginners encounter is the ability to iterate over collections such as lists, tuples, and dictionaries. This foundational feature of Python is not only intuitive but also highly versatile, as it can be applied to various data types, allowing for straightforward traversal and manipulation of data structures.

Consider the simplicity of iterating over a list:

```python
var = [1, 2, 3]

for element in var:
    print(element)
```

In this example, the `for` loop sequentially accesses each element in the list `var`, printing its value. The ease and clarity of this syntax is one of the reasons Python is lauded for its readability and ease of use. The iteration process isn’t confined to just lists; it seamlessly extends to other data types, such as strings:

```python
var = 'abc'

for c in var:
    print(c)
```

Here, the same iteration concept is applied to a string, treating each character as an individual element. This universality of the iteration syntax across different data types showcases Python’s consistency in design, making it easier for programmers to work with a variety of data structures.

The concept of iterables is fundamental in these examples. In Python, an iterable is any object that can be looped over. More technically, it is any object that implements the `__iter__` method, which returns an iterator, or, as a fallback, a `__getitem__` method that accepts integer indexes starting at zero. The iterator then allows the object to be traversed one element at a time. This raises a fascinating possibility: the creation of custom iterables.

Custom iterables can be created by defining classes that implement the `__iter__` method. This allows for the creation of objects that can be iterated over in a customized manner, defining what elements are returned during the iteration process. For instance, a class could be created that iterates over a complex data structure, or generates a sequence of numbers based on specific rules. The concept of iterating over collections in Python is not just a fundamental skill but also a gateway to more advanced programming techniques. It introduces the concept of iterables, leading to the exploration of creating custom iterables, which opens up a whole new realm of possibilities in Python programming. Whether working with built-in data types or custom-defined structures, the ability to iterate over elements is a powerful tool in any Python programmer’s toolkit.
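As a minimal sketch of the idea, the hypothetical `EvenNumbers` class below (the name and the rule are assumptions chosen for illustration) produces a sequence of numbers based on a simple rule. Its `__iter__` method delegates to the iterator of a built-in `range` object:

```python
class EvenNumbers:
    """Hypothetical iterable over the even numbers below `limit`."""

    def __init__(self, limit):
        self.limit = limit

    def __iter__(self):
        # Delegate to the iterator of a built-in range object.
        return iter(range(0, self.limit, 2))


evens = EvenNumbers(10)
for n in evens:
    print(n)  # prints 0, 2, 4, 6, 8 on separate lines
```

Because `__iter__` returns a fresh iterator on every call, the same `EvenNumbers` instance can be looped over more than once.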

The `__getitem__` Method in Action

To create custom data types, defining classes is the standard approach. Consider a simple class `Arr`:

```python
class Arr:
    def __init__(self):
        self.a = 0
        self.b = 1
        self.c = 2
```

Attempting to iterate over an instance of this class using a `for` loop initially results in a TypeError, as Python doesn’t inherently know how to traverse the object. To make `Arr` iterable, the `__getitem__` method is implemented:

```python
class Arr:
    # ... previous code ...

    def __getitem__(self, item):
        if item == 0:
            return self.a
        elif item == 1:
            return self.b
        elif item == 2:
            return self.c
        # Any other index is out of range and ends the sequence
        raise IndexError(item)
```

Now, iterating over an instance of `Arr` works as expected, similar to iterating over a list or tuple. When a class defines no `__iter__` method, Python falls back to calling `__getitem__` with the indexes 0, 1, 2, and so on. Raising `IndexError` indicates the end of the sequence.
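Putting the two fragments together, a complete and runnable version of the class might look like the following sketch. It signals the end of the sequence with `IndexError`, the exception that the sequence protocol documents for this purpose:

```python
class Arr:
    def __init__(self):
        self.a = 0
        self.b = 1
        self.c = 2

    def __getitem__(self, item):
        # Map positions 0, 1, 2 onto the three attributes.
        if item == 0:
            return self.a
        elif item == 1:
            return self.b
        elif item == 2:
            return self.c
        # Any other index is out of range and ends the iteration.
        raise IndexError(item)


arr = Arr()
for element in arr:
    print(element)  # prints 0, then 1, then 2
```

The same method also supports direct indexing, so `arr[1]` returns `1`.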

Crafting a Word-by-Word Iterable

The `Sentence` class presents an excellent example of custom iterables, showcasing Python’s flexibility and object-oriented capabilities. By splitting a string into words and allowing iteration over these words, the class demonstrates a practical application of custom iterables in processing and analyzing textual data.

In this class, the constructor (`__init__`) takes a string and splits it into words, storing them in the `words` attribute. The `__getitem__` method then allows for the retrieval of these words one by one, making the class iterable. When used in a loop, `Sentence` enables easy iteration over each word in the provided text, as shown in the implementation:

```python
class Sentence:
    def __init__(self, text):
        self.words = text.split(' ')

    def __getitem__(self, item):
        return self.words[item]
```

This approach to creating a custom iterable is straightforward and effective for basic text processing tasks, such as word count or simple text analysis. However, the simplicity of the `Sentence` class also brings to light certain limitations. One significant limitation is its handling of punctuation. The class naively splits the text by spaces, without considering punctuation marks, which could lead to inaccuracies in word counts or text analysis. For instance, words followed by commas or periods are treated as distinct from the same words without punctuation.

To enhance the `Sentence` class, additional text processing logic could be implemented. This might involve using regular expressions to split the text more accurately, handling different types of whitespace and punctuation, or even integrating natural language processing (NLP) techniques for more sophisticated text analysis. Despite its limitations, the `Sentence` class is a valuable exercise in understanding custom iterables in Python. It demonstrates how easily Python can be adapted to specific data processing needs, and how basic classes can be expanded and refined for more complex applications. The class serves as a foundation upon which more advanced text processing functionalities can be built, exemplifying the iterative nature of software development and the power of Python’s iterable protocol.
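One possible refinement, sketched here under the assumption that a "word" is any run of letters, digits, or underscores, replaces `split(' ')` with a regular expression. This is only one of several reasonable tokenization rules:

```python
import re


class Sentence:
    def __init__(self, text):
        # \w+ matches runs of letters, digits and underscores, so
        # surrounding punctuation and whitespace are dropped.
        self.words = re.findall(r'\w+', text)

    def __getitem__(self, item):
        return self.words[item]


s = Sentence('Hello, world. Hello again!')
words = list(s)
print(words)  # ['Hello', 'world', 'Hello', 'again']
```

With this rule, `'Hello,'` and `'Hello'` count as the same word. The trade-off is that contractions such as `don't` are split at the apostrophe, so real text analysis would likely need a more careful pattern.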

Key Takeaway

To make a class work in a `for` loop, it is enough to provide a `__getitem__` method that retrieves elements using a zero-based index and raises `IndexError` once the index runs past the last element. This works seamlessly if the method is backed by a list, as in the `Sentence` example, because the list raises `IndexError` itself. For custom implementations like `Arr`, careful definition of the element sequence is necessary. Note that `__getitem__` is only a fallback: Python first looks for an `__iter__` method, which is the more general way to define an iterable. This exploration highlights the versatility of iterables in Python and how they can be tailored to specific needs.

Dynamic Element Generation in Python

Python’s tools like generators, iterables, and iterators are incredibly versatile. This section explores the concept of generating elements dynamically, moving beyond predefined elements at the time of class instantiation.

Generating Random Numbers On-Demand

The `RandomNumbers` class is an intriguing example of how Python’s iteration capabilities can be harnessed to create dynamic and potentially infinite data streams. By leveraging the `__getitem__` method, this class continually generates random numbers, showcasing the flexibility and power of custom iterable objects in Python.

In this class, each call to `__getitem__` results in the generation of a random number between 0 and 10. This behavior epitomizes the essence of Python iterables — an object that can produce a sequence of values. When incorporated into a loop, `RandomNumbers` can yield an unending stream of random numbers, illustrating how custom iterables can be used to create dynamic data sources.

However, this infinite generation of data highlights a crucial aspect of working with iterables: the need for a termination condition. In its current form, iterating over an instance of `RandomNumbers` without any constraints would result in an infinite loop. To address this, implementing a stopping condition within the `__getitem__` method is essential. For instance, raising an `IndexError` when a specific condition is met, such as generating a particular number or reaching a predetermined count of iterations, terminates the iteration cleanly, because `IndexError` is the signal that the `__getitem__`-based protocol uses for the end of a sequence.

Adding such a stopping condition transforms the `RandomNumbers` class from a generator of endless random numbers into a more controlled and manageable data source. This modification not only prevents potential runtime issues but also aligns the class’s behavior with typical iteration patterns in Python, where sequences have a defined beginning and end. The `RandomNumbers` class serves as a valuable illustration of custom iterables in Python, demonstrating their potential to create dynamic sequences. It also underscores the importance of considering termination conditions in iterable design, ensuring that the iteration process is both practical and aligned with Python’s iteration protocols.
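A minimal sketch of such a class is shown below. The constructor argument `count` and the choice of a fixed number of items as the stopping condition are assumptions made for illustration; only the 0 to 10 range comes from the description above:

```python
import random


class RandomNumbers:
    """Hypothetical sketch: an iterable of random integers between 0 and 10."""

    def __init__(self, count):
        # Assumed stopping condition: produce a fixed number of items.
        self.count = count

    def __getitem__(self, item):
        if item >= self.count:
            # Ends the iteration once `count` values have been requested.
            raise IndexError(item)
        # A new value is generated on every call; nothing is stored.
        return random.randint(0, 10)  # both endpoints are included


numbers = list(RandomNumbers(5))
print(numbers)  # five random integers; the values differ on every run
```

Removing the `if` check would turn the class back into an endless source, in which case the consuming loop would need its own `break`.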

Real-World Applications of On-Demand Generators

This dynamic element generation technique is particularly useful in contexts like signal acquisition from devices that generate sequential values, such as camera frames or analog readings. It’s a concept that will be further explored in tutorials focused on device interfacing.

Another common example is file handling in Python. When opening a file, Python doesn’t load the entire content into memory but allows iteration over each line:

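```python
with open('file.txt', 'r') as f:
    for line in f:
        print(line, end='')  # each line already ends with a newline
```

This approach enables efficient handling of large files, including files that exceed memory capacity, because only a small portion of the file is held in memory at any time.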

Understanding Iterators

The exploration of iterable objects using the `__getitem__` method in Python provides a foundational understanding of how to traverse through collections. However, this is just the tip of the iceberg when it comes to Python’s iteration protocol. Beneath the surface lies a more nuanced mechanism, involving the interplay between iterables and iterators, each serving a distinct purpose in the iteration process.

Iterables are objects that can be looped over, such as lists, tuples, or custom objects that implement `__iter__` or, as a fallback, `__getitem__`. However, the actual work of iteration is done by iterators. An iterator is an object that abstracts the process of iterating over a collection, keeping track of the current position. This is achieved through two key methods: `__iter__`, which returns the iterator object itself, and `__next__`, which retrieves the next item in the sequence and updates the current position.

This distinction between iterables and iterators is subtle but significant. While an iterable defines a collection of items that can be iterated over, an iterator is the mechanism that actually performs the iteration. When a `for` loop or similar construct iterates over an iterable, it internally converts the iterable into an iterator through the `__iter__` method. The loop then repeatedly calls `__next__` on the iterator to retrieve each item until a `StopIteration` exception is raised, signaling the end of the sequence. Understanding this underlying mechanism is crucial for advanced Python programming. It not only helps in creating more efficient and robust iterable objects but also aids in understanding Python’s iteration model, which is a key part of the language’s design philosophy. This knowledge empowers developers to leverage Python’s full potential in managing collections and data streams, enhancing the functionality and readability of their code.
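The mechanism described above can be written out by hand. The following sketch is roughly what a `for` loop does behind the scenes; the `collected` list is only there to make the result visible:

```python
var = ['a', 1, 0.1]

collected = []
it = iter(var)            # step 1: ask the iterable for an iterator
while True:
    try:
        item = next(it)   # step 2: request the next element
    except StopIteration:
        break             # step 3: the iterator is exhausted
    collected.append(item)

print(collected)  # ['a', 1, 0.1]
```

Calling `iter` on the iterator itself simply returns the same object, which is why iterators can also be used directly in a `for` loop.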

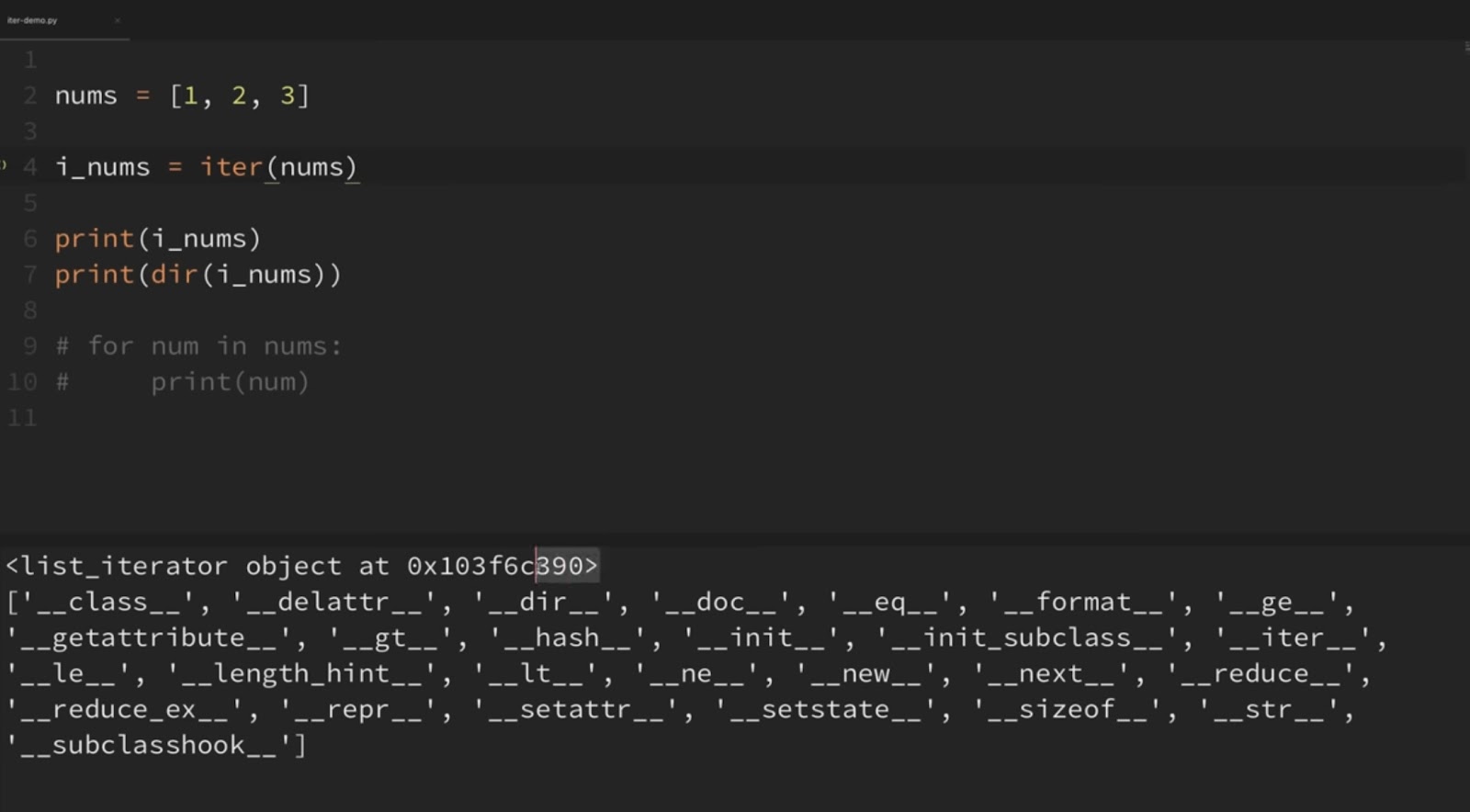

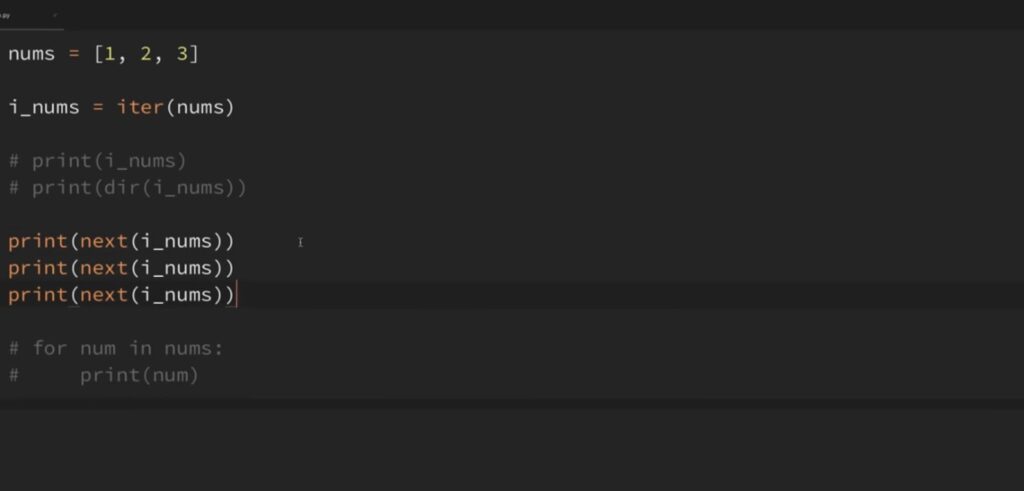

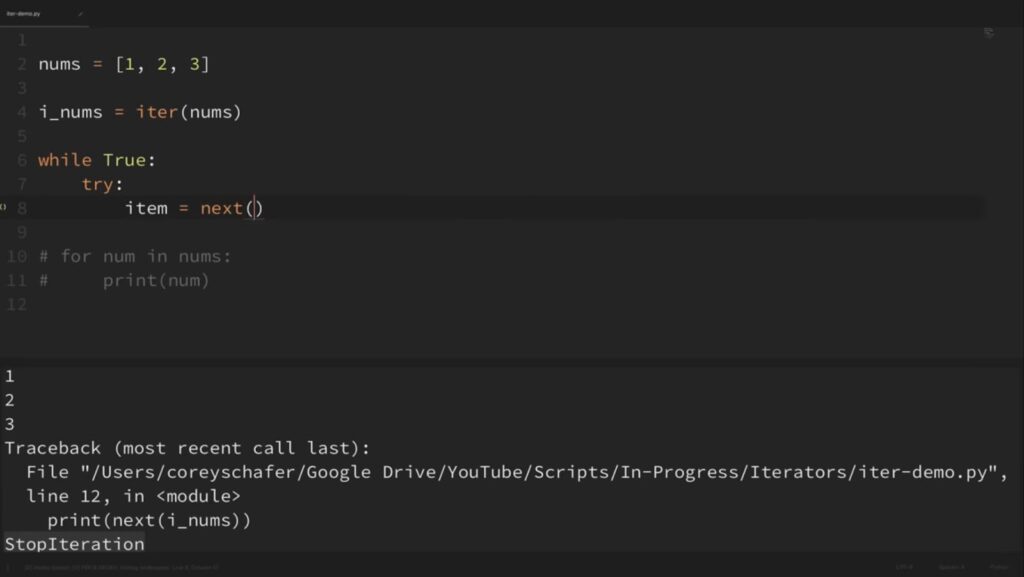

Iterators in Action

An iterator is what Python uses to keep track of the position during iteration. Using the built-in `iter` function, one can create an iterator from an iterable:

```python
var = ['a', 1, 0.1]

it = iter(var)

next(it)  # Returns 'a'
next(it)  # Returns 1
```

When the iterator is exhausted, it raises a `StopIteration` exception.

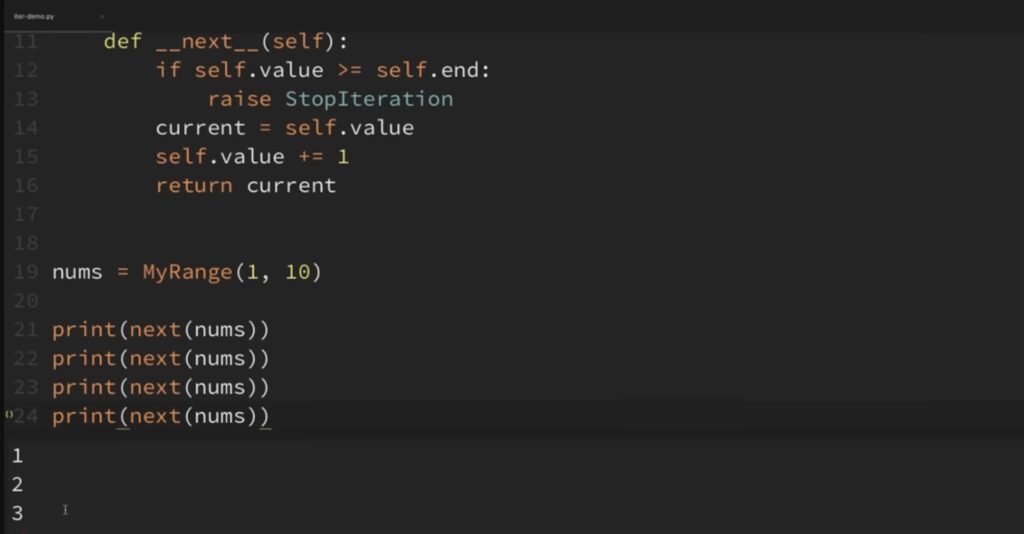

Custom Iterator for a Class

Custom iterators can be defined for a class, as seen in the modified `Sentence` class, which iterates over words in reverse order:

```python
class SentenceIterator:
    # ...


class Sentence:
    def __init__(self, text):
        self.words = text.split(' ')

    def __iter__(self):
        return SentenceIterator(self.words)
```

With this setup, the iteration order is reversed, but direct indexing is not supported without a `__getitem__` method. Adding this method allows for both indexed access and reversed iteration.
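Since the body of `SentenceIterator` is elided above, the following is a hypothetical sketch of how such a reverse iterator could be written, not the original implementation. The `index` attribute and the optional `__getitem__` on `Sentence` are assumptions:

```python
class SentenceIterator:
    """Hypothetical iterator that walks a list of words from last to first."""

    def __init__(self, words):
        self.words = words
        self.index = len(words) - 1  # start at the last word

    def __iter__(self):
        return self  # an iterator returns itself from __iter__

    def __next__(self):
        if self.index < 0:
            raise StopIteration  # no words left
        word = self.words[self.index]
        self.index -= 1
        return word


class Sentence:
    def __init__(self, text):
        self.words = text.split(' ')

    def __iter__(self):
        return SentenceIterator(self.words)

    def __getitem__(self, item):
        return self.words[item]  # optional: enables indexed access


s = Sentence('this is a test')
print(list(s))  # ['test', 'a', 'is', 'this']
print(s[0])     # this
```

When both methods are present, `for` loops use `__iter__`, so iteration runs in reverse while `s[0]` still returns the first word.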

Separating Iterator and Iterable

It is generally advisable to separate the iterable and iterator functionalities, especially in scientific contexts where different iterator behaviors might be required based on certain parameters.
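Another practical reason for the separation is that an iterator carries a single position, whereas an iterable can hand out a fresh iterator for every loop. The sketch below shows the difference using a built-in list; the variable names are illustrative:

```python
words = ['this', 'is', 'a', 'test']   # an iterable: each loop gets a new iterator
shared = iter(words)                  # an iterator: carries a single position

first_pass = list(shared)
second_pass = list(shared)            # already exhausted, so nothing is left

print(first_pass)   # ['this', 'is', 'a', 'test']
print(second_pass)  # []
```

A class that acts as its own iterator behaves like `shared`: once it has been traversed, it cannot be looped over again without being reset or recreated.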

The Role of Generators

In many scenarios, defining a separate iterator is unnecessary, as generators can be directly used in the `__iter__` definition. Generators simplify the creation of iterators, making the code more concise and readable.

Python’s approach to creating elements dynamically through iterables and iterators facilitates efficient data processing, particularly in large-scale or real-time applications, and makes programs more flexible and scalable.

Harnessing Generators with the `yield` Keyword

In the realm of Python programming, generators are a potent yet often underutilized feature, holding the key to more efficient and innovative coding practices. Their true potential unfolds in scenarios demanding the generation of extensive or infinite sequences of data, where traditional methods fall short due to memory constraints. The essence of generators lies in their use of the `yield` keyword, which distinguishes them significantly from conventional functions using `return`.

The power of generators is most evident when dealing with tasks that require creating long or potentially infinite sequences. For example, generating a sequence of numbers that continues indefinitely is simply not feasible with standard data structures due to memory limitations. However, generators elegantly circumvent this issue by producing values on the fly and yielding them one at a time. This approach drastically reduces memory usage, as only the current value needs to be stored at any given moment. Generators also bring a new level of efficiency to Python programming. By suspending their state after each `yield`, they can resume where they left off, thereby avoiding the overhead of starting from scratch with each iteration. This feature is particularly beneficial in scenarios involving iterative data processing, complex calculations, or algorithmic data generation, where restarting the process for each new value would be computationally expensive.

Despite these advantages, generators are not as widely employed as they could be. This underutilization may stem from a lack of awareness or understanding of their capabilities and benefits. Learning to harness the power of the `yield` keyword can open up new avenues in programming, allowing developers to write more efficient, scalable, and cleaner code. Generators are a valuable yet under-exploited asset in Python’s toolbox. They offer a memory-efficient way to handle large data sequences and bring a new level of computational efficiency to Python code. As developers gain a deeper understanding of generators and start incorporating them into their work, they will unlock new potential in their programming, tackling tasks that were once considered challenging or impossible with traditional methods.
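As a small illustration of an infinite sequence, the hypothetical `naturals` generator below never terminates on its own. The standard-library function `itertools.islice` is used to take only the first few values:

```python
from itertools import islice


def naturals():
    """Hypothetical generator: yields 0, 1, 2, ... without end."""
    n = 0
    while True:
        yield n   # execution pauses here until the next value is requested
        n += 1


first_five = list(islice(naturals(), 5))
print(first_five)  # [0, 1, 2, 3, 4]
```

At no point does the full sequence exist in memory; only the current value of `n` is stored between requests.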

Generator Fundamentals

The simplicity of the `make_numbers` example serves as an ideal illustration to unravel the mechanics of generators in Python. This example elegantly demonstrates how generators differ fundamentally from conventional functions through their use of the `yield` statement.

In this example, `make_numbers` is defined as a function, but instead of returning a value and exiting, it employs `yield` to produce a series of values over time. The use of `yield` transforms the function into a generator, which, when called, does not execute its content immediately. Instead, it returns a generator object, poised to begin its execution upon the request of the next element.

The key takeaway from this example is the lazy execution nature of generators. When `make_numbers` is invoked, it doesn’t run the print statements or produce any numbers immediately. This characteristic is what makes generators particularly memory-efficient, as they compute and yield values one at a time, only when needed. The execution of the generator begins only when `next(a)` is called. Each call to `next` resumes the generator’s execution from where it last yielded a value, runs until the next `yield` statement, and then pauses again. When the generator exhausts all its `yield` statements, it raises a `StopIteration` exception, signaling that all values have been generated. This behavior is integral to Python’s iteration protocol and is how for-loops and other iterator-consuming functions know when to stop iterating.

This straightforward example is just the tip of the iceberg in understanding the full potential of generators. By changing the content within the generator function, a wide variety of tasks can be accomplished, from simple number generation to complex, resource-intensive computations. Generators offer a way to write cleaner, more efficient code, especially in scenarios where managing memory and processing time is crucial. The `make_numbers` function is a perfect starting point for grasping the concept of generators in Python. It highlights the lazy execution strategy, efficient memory usage, and the seamless integration of generators within Python’s iteration protocol, paving the way for more advanced and efficient programming techniques.
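A minimal sketch of what `make_numbers` might look like is given below. The three yielded values and the printed messages are assumptions chosen to match the behavior described above:

```python
def make_numbers():
    print('Making a number')
    yield 1
    print('Making a new number')
    yield 2
    print('Making the last number')
    yield 3


a = make_numbers()   # nothing is printed yet: the body has not started
first = next(a)      # prints 'Making a number', then pauses at the first yield
second = next(a)     # resumes after the first yield, pauses at the second
third = next(a)

try:
    next(a)          # no yield statements are left
    exhausted = False
except StopIteration:
    exhausted = True

print(first, second, third, exhausted)  # 1 2 3 True
```

Each message appears only when the corresponding value is requested, which makes the lazy execution visible.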

Generators in Action

Generators can be employed in `for` loops just like any other iterable:

```python
b = make_numbers()

for i in b:
    print(i)
```

Additionally, generators can be designed to yield a flexible number of values. For instance, a generator can produce equally spaced integers between a start and stop value:

```python
def make_numbers(start, stop, step):
    i = start
    while i <= stop:
        yield i
        i += step
```

The key advantage here is that each number is generated only when needed, hence minimizing memory usage.
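A short usage sketch follows, repeating the function so that the block runs on its own. Note that the stop value is included because the loop condition uses `<=`, and that `step` is assumed to be positive:

```python
def make_numbers(start, stop, step):
    i = start
    while i <= stop:   # the stop value itself is included
        yield i
        i += step      # assumes a positive step; otherwise the loop never ends


print(list(make_numbers(0, 10, 5)))   # [0, 5, 10]

# Consumers such as sum() pull one value at a time, so no list is built.
total = sum(make_numbers(1, 100, 1))
print(total)                          # 5050
```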

Generators in Classes

Integrating generators into classes can simplify iteration through elements. For example, the `Sentence` class can be modified to loop through words using a generator:

```python
class Sentence:
    def __init__(self, text):
        self.words = text.split(' ')

    def __iter__(self):
        for word in self.words:
            yield word
```

This approach negates the need for an explicit iterator or a `__next__` method.

Generators for File Processing

The utilization of generators in Python, particularly in the context of processing large files, showcases their immense value in optimizing memory usage and enhancing code efficiency. The `WordsFromFile` class is a prime example of this application, demonstrating how generators can elegantly handle data too voluminous to be loaded into memory entirely.

Generators are particularly adept at lazy loading—only processing data as and when required. This approach is vital when dealing with extensive files, as it allows the program to consume only a fraction of the file’s data at a time, significantly reducing memory footprint. The `WordsFromFile` class iterates through a file, line by line, using a generator within the `__iter__` method. This method opens the file and yields each word individually, ensuring that only one line is read and processed at a time.

This design pattern is advantageous in multiple scenarios, such as data analysis on large log files, parsing through extensive datasets, or even processing real-time data streams where data is continuously appended. By yielding words one at a time, the generator ensures that the program’s memory usage remains minimal, regardless of the file’s size. Another significant advantage of this approach is its scalability. As the size of the input file grows, the generator-based solution scales gracefully. There’s no need to redesign the data handling logic or worry about memory constraints typically associated with large files.

Furthermore, generators enhance code readability and maintainability. The `WordsFromFile` class clearly illustrates how a complex task, like file processing, can be broken down into manageable, logical chunks. This modular design makes the code easier to understand and modify, leading to better software practices and more maintainable codebases. The use of generators in file processing exemplifies Python’s capability to handle large data sets efficiently. It offers a scalable, memory-efficient solution while maintaining code readability and maintainability, making it an invaluable tool for any Python developer dealing with large files or streaming data.
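The following is a hypothetical sketch of what a `WordsFromFile` class could look like, based only on the description above. The constructor argument `filename` and the use of `str.split` to separate words are assumptions, and the temporary file exists only to make the example self-contained:

```python
import os
import tempfile


class WordsFromFile:
    """Hypothetical sketch: iterate lazily over the words of a text file."""

    def __init__(self, filename):
        self.filename = filename

    def __iter__(self):
        # The file is opened anew for each iteration and read line by line.
        with open(self.filename, 'r') as f:
            for line in f:
                for word in line.split():
                    yield word


# Demonstration with a small temporary file.
with tempfile.NamedTemporaryFile('w', suffix='.txt', delete=False) as tmp:
    tmp.write('first line here\nsecond line\n')
    path = tmp.name

words = list(WordsFromFile(path))
os.remove(path)
print(words)  # ['first', 'line', 'here', 'second', 'line']
```

Because the file is opened inside `__iter__`, the same `WordsFromFile` object can be iterated several times, and the `with` block closes the file once the generator finishes.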

Generators, Iterators, Iterables: Understanding the Differences

In Python programming, the concepts of iterables, iterators, and generators are pivotal, each playing a unique role in how data is handled and processed. Understanding these concepts is crucial for writing efficient and effective Python code, especially when working with large datasets or streams of data.

- Iterables are objects that can be looped over, allowing access to their elements one at a time. They are foundational in Python, with many built-in types like lists, tuples, and strings being iterable. The power of iterables lies in their versatility and simplicity; they provide a standard way to access a collection of items, making code more readable and maintainable;

- Iterators are specialized objects that traverse through all the elements of an iterable. They are responsible for keeping track of the current position during iteration. This is achieved through two methods: `__iter__` returns the iterator object itself, and `__next__` moves to the next element, raising a `StopIteration` exception when no more elements are available. By abstracting the iteration process, iterators allow for efficient navigation through complex data structures without exposing the underlying implementation;

- Generators are a more nuanced and powerful feature, using the `yield` syntax to produce sequence elements on demand. Unlike functions that return a single value and exit, generators can yield multiple values over time, pausing after each `yield` and resuming from there in the next call. This makes them ideal for representing infinite sequences, processing large files, or handling data streams where the entire dataset does not need to be loaded into memory.

One of the subtle yet significant points about iterators and generators is how they relate to each other. Every generator is an iterator: it implements both `__iter__` and `__next__` automatically. The difference lies in how they are written. A hand-written iterator class stores its position in instance attributes and implements `__next__` explicitly, while a generator function keeps its state in local variables that are suspended at each `yield`. Both can return stored values or compute new ones on demand, but generators usually express the same logic in far less code.

Incorporating these elements into Python code brings a range of benefits. For instance, iterators and generators enable handling large data sets with limited memory usage. They also allow for cleaner and more modular code, where data generation and processing logic can be neatly encapsulated within generator functions or classes. Their proper understanding and usage enable developers to write more efficient, scalable, and readable code, essential for tackling complex data-driven tasks in Python programming.
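To make the comparison concrete, the sketch below implements the same sequence twice: once as a hand-written iterator class and once as a generator function. Both names, `CountUpTo` and `count_up_to`, are hypothetical:

```python
class CountUpTo:
    """Hypothetical iterator class: produces 1..limit, tracking state by hand."""

    def __init__(self, limit):
        self.limit = limit
        self.current = 0

    def __iter__(self):
        return self

    def __next__(self):
        if self.current >= self.limit:
            raise StopIteration
        self.current += 1
        return self.current


def count_up_to(limit):
    """Equivalent generator function: the state lives in local variables."""
    current = 0
    while current < limit:
        current += 1
        yield current


gen = count_up_to(3)
print(iter(gen) is gen)        # True: a generator is its own iterator
print(list(CountUpTo(3)))      # [1, 2, 3]
print(list(gen))               # [1, 2, 3]
```

Both versions compute their values on demand; the generator simply lets Python handle the bookkeeping that the class does explicitly.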

Conclusion

In the vast landscape of Python programming, generators and iterators stand out as essential tools for efficient and effective data handling. Their importance becomes increasingly apparent in scenarios dealing with large datasets or continuous data streams, where conventional data storage methods are impractical or insufficient. Generators, with their unique `yield` keyword, bring a novel approach to data generation. Unlike standard functions that return a single value and terminate, generators pause their execution after each `yield` and resume from where they left off, making them perfect for producing sequences of data over time without the memory overhead of storing the entire sequence. This on-demand data generation makes generators a boon in applications like real-time data processing, large file handling, and iterative data transformations.

Iterators complement generators by offering a structured way to traverse through a collection of items. In essence, they provide a roadmap for navigating complex data structures, ensuring that each element is accessed in a systematic manner. This is particularly useful when dealing with custom data types or when interfacing with external data sources, like reading lines from a file or fetching rows from a database. The integration of generators into class-based structures exemplifies Python’s versatility. It allows for more readable and maintainable code, particularly when dealing with iterable objects. The ability to iterate over objects in a memory-efficient manner opens up possibilities for handling data that would otherwise be too large or cumbersome to process.

Understanding and utilizing generators and iterators is more than a programming skill—it’s a way of thinking about data processing. It encourages developers to consider the flow of data through their applications and to design their code around efficient data access and manipulation. In summary, generators and iterators are not just features of Python; they embody a fundamental approach to handling and interacting with data in modern programming. Their proper use can lead to more efficient, scalable, and readable code, enabling developers to tackle a wide range of programming challenges with confidence and creativity.